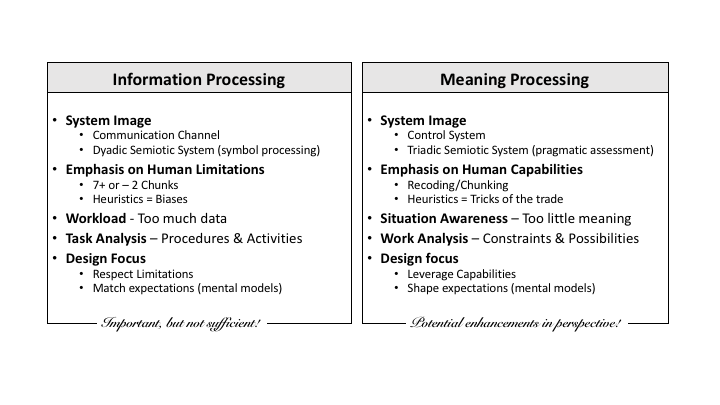

This is the sixth and final post in a series examining some of the implications of a CSE perspective on sociotechnical systems. The table below summarizes some of the ways that CSE has expanded our vision of humans. In this post, the focus is on the implications for design.

One of the well-known mantras of the Human Factors profession has been:

Know thy user.

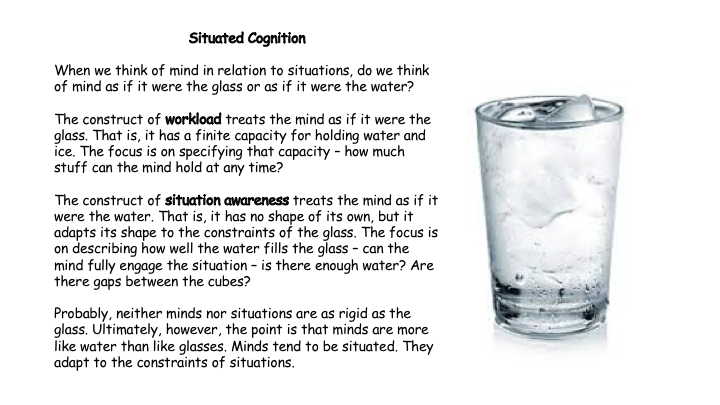

Traditionally, this has meant that a fundamental role for human factors is to make sure that system designers are aware of the computational limitations (e.g., perceptual thresholds, working memory capacity, potential violations of classical logic due to reliance on heuristics) and expectations (e.g., population stereotypes, mental models) that bound human performance.

It is important to note that these limitations have generally been validated by a wealth of scientific research, so designers should take them into account. Information systems should be designed so that relevant information is perceptually salient, working memory is not overtaxed, and expectations and population stereotypes are not violated.

The emphasis on the bounds of human rationality, however, tends to put human factors at the back of the innovation parade. While others are touting the opportunities of emerging technologies, HF is apologizing for the weaknesses of the humans. This feeds a narrative in which automation becomes the 'hero' and humans are pushed into the background as the weakest link: a source of error and an obstacle to innovation. From the technologists' perspective, the world would be so much better if we could simply engineer the humans out of the system (e.g., get human drivers off the road to increase highway safety).

But of course, we know that this is a false narrative. Bounded rationality is not unique to humans; all technical systems are bounded (e.g., by the assumptions of their designers or, in the case of neural nets, by the bounds of their training and experience). It is important to understand that the bounds of rationality are a function of the complexity, or requisite variety, of nature. It is the high dimensionality and interconnectedness of the natural world that bounds any information processing system (human or robot/automaton) that must cope with it. In nature, there will always be potential sources of information that lie beyond the limits of any computational system.

The implication for designing sociotechnical systems is that designers need to take advantage of whatever resources are available to cope with this requisite variety of nature. For CSE, the creative problem-solving abilities of humans and human social systems are among the resources that designers should leverage. Thus, the muddling through of humans (i.e., incrementalism) described by Lindblom is NOT considered a weakness, but rather a strength.

Most critics of incrementalism believe that doing better usually means turning away from incrementalism. Incrementalists believe that for complex problem solving it usually means practicing incrementalism more skillfully and turning away from it only rarely. (C.E. Lindblom, 1979, p. 517)

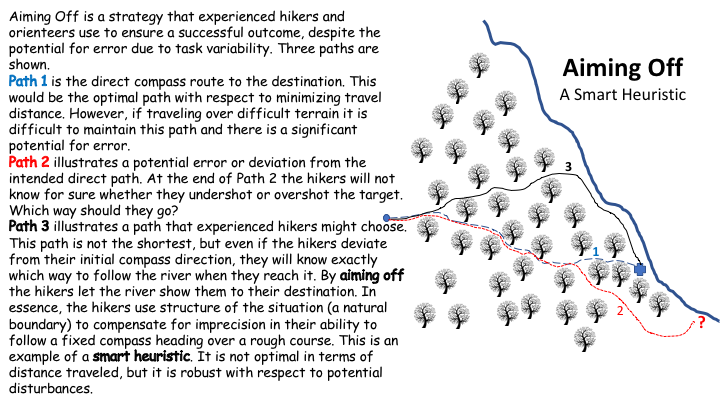

Thus, when designing systems to cope with complex natural problems (e.g., healthcare, economics, security), it is important to appreciate the information limitations of all the systems involved (human and otherwise). However, this is not enough. It is also important to consider the capabilities of all the systems involved, and one of these is the creative problem-solving capacity of smart humans and human social systems. A goal for design should be to support this creative capacity by helping humans tune into the deep structure of natural problems, so that they can skillfully muddle through and potentially discover smart solutions that even the designers could not have anticipated.

To 'know thy user', it is not sufficient to simply catalog the limitations. Knowing thy users also entails appreciating the capabilities they offer for coping with the complexities of nature.

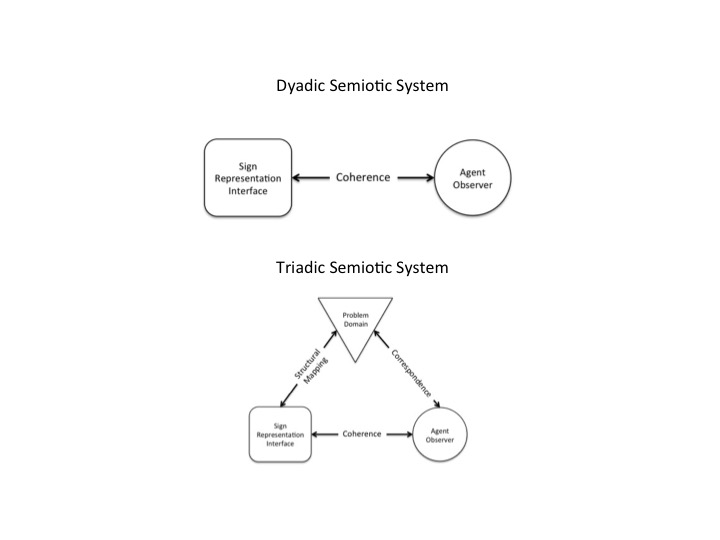

This often involves constructing interface representations that shape human mental models or expectations toward smarter, more productive ways of thinking. In other words, the goal of interface design is to provide insight into the deep structure, or meaningful dimensions, of a problem, so that humans can learn from mistakes and eventually discover clever strategies for coping with the unavoidable complexities of the natural world.

Lindblom, C.E. (1979). Still muddling, not yet through. Public Administration Review, 39(6), 517-526.