There is a prevailing assumption within Western cultures of a dichotomy in which mind and matter are fundamentally different (ontologically distinct) kinds of phenomena that work according to different principles or laws. On the one hand, there is the world of Matter, which constitutes the realm of physical phenomena and behaves according to the Laws of Physics (e.g., the Laws of Motion, the Laws of Thermodynamics). On the other hand, there is the world of Mind, which constitutes the realm of mental phenomena and behaves according to a completely different set of laws or principles (e.g., psychological or information processing principles).

This is sometimes referred to as the Mind/Body problem, suggesting that our bodies are subject to the Laws of Physics but that our minds are subject to different laws related to psychology or information. In essence, we have a world of hardware and a world of software, and these two worlds are assumed to be fundamentally different, operating according to different laws or principles and requiring separate sciences.

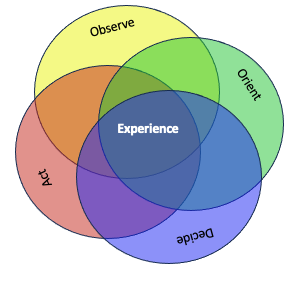

However, this belief is not universal, and it has been rejected by some notable philosophers/scientists (e.g., William James). James conceived of experience as a unified whole that included both physical (objective) and mental (subjective) constraints.

> Just so, I maintain, does a given undivided portion of experience, taken in one context of associates, play the part of a knower, of a state of mind, of 'consciousness'; while in a different context the same undivided bit of experience plays the part of a thing known, of an objective 'content.' In a word, in one group it figures as a thought, in another group as a thing. And, since it can figure in both groups simultaneously we have every right to speak of it as subjective and objective, both at once. (James 1912, Essay I)

Further, James argued for a single, unified science focused on the relations that constitute the totality of experience, which results from the combined impact of physical and mental constraints. In our book, "A Meaning Processing Approach to Cognition," Fred Voorhorst and I make the case that three duals are fundamental to a unified science of experience. Each dual reflects specific relations between mental (subjective) and physical (objective) constraints.

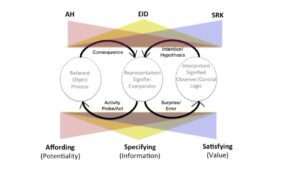

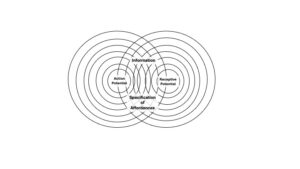

The first dual is a concept introduced by James Gibson that has gained increasing acceptance in the design community. This is the construct of affordance or affording. An affordance is a potential for action that depends on the properties of an agent in relation to the properties of objects. The prototypical example is the affordance of graspability, which reflects the relation between an agent's effectors (e.g., hands) and the size, orientation, and shape of objects. Another example, one that reflects the agency of a human-technology system, is land-ability. This reflects the properties of a surface relative to the capabilities of an aircraft. Different aircraft (fixed wing versus rotary wing) are capable of landing successfully on different types of surfaces. In control theoretic terms, affordance is closely related to the concept of controllability, reflecting the joint constraints of the agent and the situation on acting.

The second dual is a concept that is related to James Gibson's concept of information (e.g., the optical array) and that was the focus of Eleanor Gibson's research on perceptual learning and attunement. We refer to this dual as specificity or specifying. This refers to the relation between the sensory/perceptual capabilities or perspicacity of an agent and the structure available in the physical medium (e.g., the light, acoustics, tactile properties, or the properties of a graphical interface). For example, due to the angular projection of light, the motions of an observer relative to the surfaces in the environment are well specified in the optical array (e.g., the imminence of collision, or the angle of approach to a runway). In control theoretic terms, specificity is closely related to the construct of observability - reflecting the quality of the feedback with respect to specifying the states of a process being controlled. E.J. Gibson's work on perceptual learning reflects the insight that it typically takes experience for organisms to discover and attend to those structures in the medium that are discriminating or diagnostic with respect to different functions or intentions. For example, pilots must learn to pick up the optical features that specify a safe approach to landing.

The third dual is a concept that James Gibson included in his definition of affordance, but that we feel is better isolated as a dual in its own right. We refer to this dual as satisfaction or satisfying. This refers to the relation between the consequences of an action and the intentions, desires, or health of an actor. If the consequences are consistent with the desires of an actor, or if they are healthy, then the relation is satisfying. If the consequences are counter to the actor's desires, or unhealthy, then the relation is unsatisfying. For example, a food that is healthy or a safe aircraft landing would be satisfying, but a food that is poisonous or a crash landing would be unsatisfying. In control theoretic terms, satisfaction is closely related to the cost function that might be used to determine the quality or optimality of a control solution. In essence, the satisfying dimension reflects the quality of outcomes.

We see these three duals as essential properties of any control system or sensemaking organization. That is, the quality of control, skill, situation awareness, or sensemaking will depend on whether the affordances (action capabilities) and their potential consequences are well specified by the available feedback. When they are, this should enable the development of quality (if not optimal) control and decision making that reduces surprises and risks and increases the potential for satisfying outcomes.
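As a rough illustration of the control theoretic analogies used above, the sketch below checks controllability and observability for a toy two-state system and scores a trajectory with a quadratic cost. Everything in it is an assumption made for illustration and is not taken from our book: the double-integrator model (position and velocity), the time step `DT`, the helper names, and the cost weights `q` and `r`. It is meant only to show how the three duals loosely map onto three standard tests, not to define them.

```python
# Toy discrete-time system: state = [position, velocity], input = acceleration.
# All values and names here are illustrative assumptions.
DT = 0.1
A = [[1.0, DT], [0.0, 1.0]]   # how the state evolves on its own
B = [[0.0], [DT]]             # how the agent's action enters (affording)
C = [[1.0, 0.0]]              # what the feedback reveals (specifying): position only


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def det2(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def is_controllable(A, B):
    """Two-state case: [B, AB] must have full rank (nonzero determinant)."""
    AB = matmul(A, B)
    ctrb = [[B[0][0], AB[0][0]], [B[1][0], AB[1][0]]]
    return abs(det2(ctrb)) > 1e-12


def is_observable(A, C):
    """Two-state case: [C; CA] must have full rank (nonzero determinant)."""
    CA = matmul(C, A)
    obsv = [C[0], CA[0]]
    return abs(det2(obsv)) > 1e-12


def quadratic_cost(states, inputs, q=1.0, r=0.1):
    """Satisfying: lower cost means outcomes closer to the desired state (the origin)."""
    state_cost = sum(q * (x[0] ** 2 + x[1] ** 2) for x in states)
    effort_cost = sum(r * u ** 2 for u in inputs)
    return state_cost + effort_cost


if __name__ == "__main__":
    print("controllable:", is_controllable(A, B))
    print("observable (position feedback):", is_observable(A, C))
    print("observable (velocity feedback):", is_observable(A, [[0.0, 1.0]]))
    print("cost:", quadratic_cost([[1.0, 0.0]], [0.0]))
```

In this toy case, feedback about position is enough to specify both states, while feedback about velocity alone is not, since position can never be recovered from it. This is a small-scale version of the point that the available structure in the medium has to specify the states that matter for action.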