When discussing decision making, people often contrast rational decision making (Spock) with intuitive decision making (Kirk). Rational decision making is typically described as deliberate, analytical thinking that can be modeled using classical logic and mathematics (e.g., probability theory). Although humans often fail to live up to the ideals of logic or mathematical models, they generally accept the prescriptions of these models as ideals to aspire to.

Intuitive decision making is a bit more mystical and is not easily described using classical logic or mathematical models. In fact, experts who seem to make decisions intuitively often have difficulty describing how they reached a decision, attributing their choices to a 'gut feeling.' In many naturalistic situations, experts may not even recognize that they are making decisions. Rather, they seem to just 'see' or 'recognize' what actions a situation demands, with little (if any) conscious deliberation.

These qualitative differences lead many to conclude that there are two distinct types of decision 'mechanisms,' and there is much debate about which is better and which is more appropriate for specific situations. For example, many see analytical thinking as the ideal when the situation allows it, and implicitly regard intuitive decisions as 'irrational.' From this perspective, intuitive decisions are at best a necessary compromise when time pressure does not permit more analytical thinking.

In contrast, others see intuitive decision making as the hallmark of expertise, allowing experts to be both faster and more accurate than novices. With extensive experience in a domain, experts seem able to tune into solutions automatically, with little deliberation. Novices in the same domain, by contrast, require extensive deliberation and still often fail to perform as well as the experts.

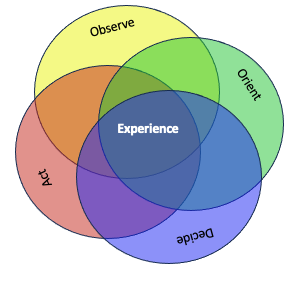

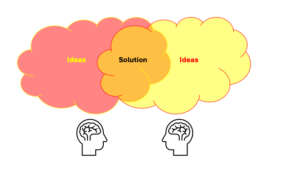

Rather than seeing these as two distinct mechanisms, perhaps we should see decision making as ideally a both/and situation, involving coordination between deliberation (rational analysis) and recognition (intuition/feel). As an analogy, consider that most people use two eyes to 'see' the world. Each eye has a slightly different view of the world. Does it make sense to ask which eye's view is more accurate? Or does the combination allow people to see the world more accurately than either eye alone (e.g., to see depth)? Note that there is a condition known as Binocular Vision Dysfunction in which the eyes are unable to coordinate and fuse their separate perspectives into a single image and/or to perceive depth.

Perhaps we should not be framing decision making as either rational or intuitive. Maybe we should be talking about 'binocular decision making,' where the quality of the process depends on how well rational and intuitive processes are coordinated and integrated to yield a deeper understanding of a situation. While each perspective has its own strengths and weaknesses, the combination could be more than the sum of its parts and may yield emergent insights (e.g., more depth) needed for success in a complex world.