Yes, following on the previous post, I do believe that Cognitive Systems Engineering (CSE) generates juice that is well worth the squeeze. However, I think it is important to distinguish between CSE as an academic enterprise, exploring basic issues about the nature of work and the nature of human cognition, and CSE as a component of a design process.

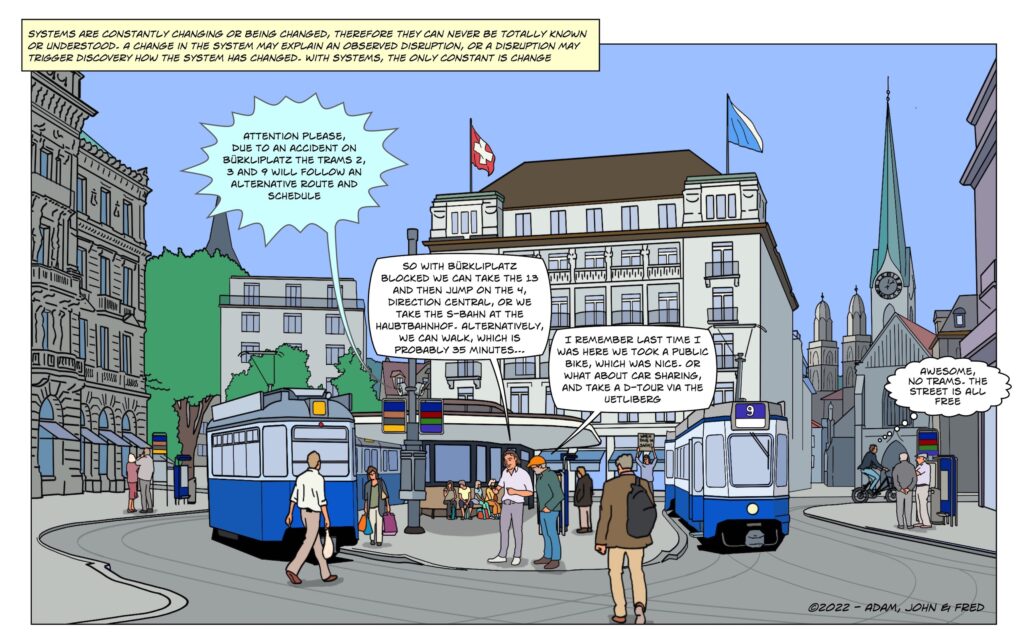

When CSE is part of a design process, work analysis is sometimes mistakenly treated as a prerequisite to other aspects of design (e.g., prototyping). The problem with this is that work analysis is never done. Work domains are not static; they are constantly changing as evolving technologies and operational contexts bring new opportunities and new challenges. Thus, there are always new depths to explore, and one question often leads to even more questions. If you delay other aspects of design until the work analysis is complete, nothing will ever get built.

Thus, work analysis should be implemented as a co-requisite to other aspects of design. For example, without some concrete context, customers or operators often have a difficult time articulating why they make certain choices, how new technologies might help, or what they need to work more effectively. One way to provide that context is to create concrete scenarios (e.g., critical incidents). Another is to give them a concrete model or prototype that they can manipulate. Even crude models (e.g., back-of-the-napkin sketches or paper prototypes) can be very effective. In the process of reviewing a scenario or interacting with a prototype, customers will sometimes recognize and articulate new insights about the prototype's utility or its potential problems. This is reflected in Michael Schrage's concept of 'Serious Play.' In essence, prototypes can engage operators and allow them to participate in generating ideas, which makes them a valuable source of knowledge about a work domain. Prototypes can greatly enhance knowledge elicitation and work analysis.

So, it is not a question of doing work analysis OR building design prototypes; success typically requires BOTH work analysis AND prototyping. Further, there is no fixed order of precedence between them. Ideally, work analysis should be tightly coupled with the more concrete aspects of design (e.g., wireframing, prototyping). In this coupling, work analysis can be both feedforward (generating hypotheses) and feedback (evaluating operator responses to concrete implementations).

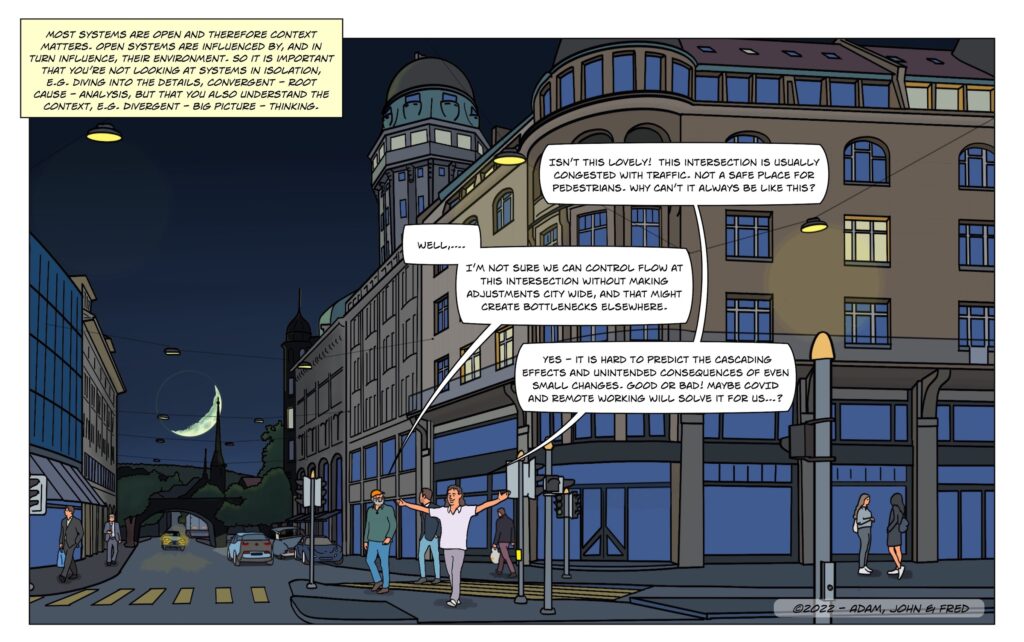

With the modern explosion of technologies for managing complex information, work domains are changing rapidly. This requires a CSE perspective to assess the changing opportunities and risks, and to generate alternative hypotheses about how to leverage these technologies more effectively to reduce risks and stay competitive. This ongoing work analysis can be a resource for designing new interfaces and decision tools, for designing alternative concepts of operation, and for developing more effective training processes. However, design decisions cannot wait for this work analysis to be complete, because it will never be complete.

In sum, CSE is both an academic enterprise and a field of practice. As an academic enterprise, it focuses on understanding cognition situated in the context of complex work environments. As such, it often challenges the conventional wisdom of a cognitive science built on reductive methods, which use laboratory puzzles to decouple information processing stages from the dynamics of natural situations. As a field of practice, CSE has to function as a co-requisite of the other components of design, probing the complexities of work domains. To be effective in practice, cognitive systems engineers have to be team players who can coordinate and integrate work analysis with the other design processes.

In other words, cognitive systems engineers have to function on interdisciplinary design teams as humble experts, rather than as know-it-all academics who want to lead the parade.