The term “Cognitive Engineering” was first suggested by Don Norman, and it is the title of a chapter in a book (User-Centered System Design, 1986) that he co-edited with Stephen Draper. Norman (1986) writes:

Cognitive Engineering, a term invented to reflect the enterprise I find myself engaged in: neither Cognitive Psychology, nor Cognitive Science, nor Human Factors. It is a type of applied Cognitive Science, trying to apply what is known from science to the design and construction of machines. It is a surprising business. On the one hand, there actually is a lot known in Cognitive Science that can be applied. On the other hand, our lack of knowledge is appalling. On the one hand, computers are ridiculously difficult to use. On the other hand, many devices are difficult to use - the problem is not restricted to computers, there are fundamental difficulties in understanding and using most complex devices. So the goal of Cognitive Engineering is to come to understand the issues, to show how to make better choices when they exist, and to show what the tradeoffs are when, as is the usual case, an improvement in one domain leads to deficits in another (p. 31).

Norman (1986) goes on to specify two major goals that he had as a Cognitive Systems Engineer:

- To understand the fundamental principles behind human action and performance that are relevant for the development of engineering principles in design.

- To devise systems that are pleasant to use - the goal is neither efficiency nor ease nor power, although these are all to be desired, but rather systems that are pleasant, even fun: to produce what Laurel calls “pleasurable engagement” (p. 32).

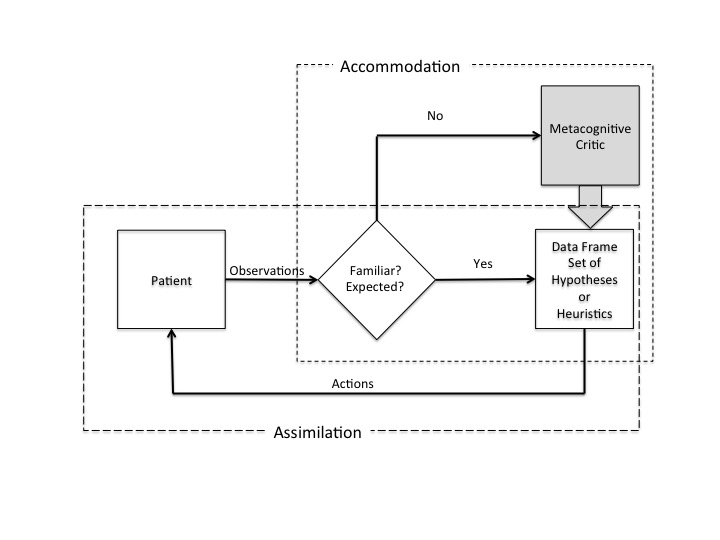

Rasmussen (1986) was less interested in pleasurable engagement and more interested in safety - noting the accidents at Three Mile Island and Bhopal as important motivations for different ways to think about human performance and work. As a controls engineer, Rasmussen was concerned that the increased utilization of centralized, automatic control systems in many industries (particularly nuclear power) was changing the role of humans in those systems. He noted that the increased use of automation was moving humans “from the immediate control of system operation to higher-level supervisory tasks and to long-term maintenance and planning tasks” (p. 1). Because of his background in controls engineering, Rasmussen understood the limitations of the automated control systems, and he recognized that these systems would eventually face situations that their designers had not anticipated (i.e., situations for which the ‘rules’ or ‘programs’ embedded in these systems were inadequate). He knew that it would be up to the human supervisors to detect and diagnose the problems that would thus ensue and to intervene (e.g., by creating new rules on the fly) to avert potential catastrophes.

The challenge that he saw for CSE was to improve the interfaces between the humans and the automated control systems in order to support supervisory control. He wrote:

Use of computer-based information technology to support decision making in supervisory systems control necessarily implies an attempt to match the information processes of the computer to the mental decision processes of an operator. This approach does not imply that computers should process information in the same way as humans would. On the contrary, the processes used by computers and humans will have to match different resource characteristics. However, to support human decision making and supervisory control, the results of computer processing must be communicated at appropriate steps of the decision sequence and in a form that is compatible with the human decision strategy. Therefore, the designer has to predict, in one way or another, which decision strategy an operator will choose. If the designer succeeds in this prediction, a very effective human-machine cooperation may result; if not, the operator may be worse off with the new support than he or she was in the traditional system … (p. 2).

Note that the information technologies that were just beginning to change the nature of work in the nuclear power industry in the 1980s, when Rasmussen made these observations, have now become significant parts of almost every aspect of modern life - from preparing a meal (e.g., Chef Watson), to maintaining personal social networks (e.g., Facebook and Instagram), to healthcare (e.g., electronic health record systems), to manufacturing (e.g., flexible, just-in-time systems), to shaping the political dialog (e.g., President Trump’s use of Twitter). Today, most of us have more computing power in our pockets (our smart phones) than was available for even the most modern nuclear power plants in the 1980s. In particular, the display technology of the 1980s was extremely primitive relative to the interactive graphics that are available today on smart phones and tablets.

A major source of confusion that has arisen in defining this relatively new field of CSE has been described in a blog post by Erik Hollnagel (2017):

The dilemma can be illustrated by considering two ways of parsing CSE. One parsing is as C(SE), meaning cognitive (systems engineering) or systems engineering from a cognitive point of view. The other is (CS)E, meaning the engineering of (cognitive systems), or the design and building of joint (cognitive) systems.

From the earliest beginnings of CSE, Hollnagel and Woods (1982; 1999) were very clear about what they thought was the appropriate parsing. Here is their description of a cognitive system:

A cognitive system produces “intelligent action,” that is, its behavior is goal oriented, based on symbol manipulation and uses knowledge of the world (heuristic knowledge) for guidance. Furthermore, a cognitive system is adaptive and able to view a problem in more than one way. A cognitive system operates using knowledge about itself and the environment, in the sense that it is able to plan and modify its actions on the basis of that knowledge. It is thus not only data driven, but also concept driven. Man is obviously a cognitive system. Machines are potentially, if not actually, cognitive systems. An MMS [Man-Machine System] regarded as a whole is definitely a cognitive system (p. 345).

Unfortunately, there are still many who do not fully appreciate the significance of treating the whole sociotechnical system as a unified system where the cognitive functions are emergent properties that depend on coordination among the components. Many have been so entrained in classical reductionistic approaches that they can’t resist the temptation to break the larger sociotechnical system into components. For these people, CSE is simply systems engineering techniques applied to cognitive components within the larger sociotechnical system. This approach fits with the classical disciplinary divisions in universities, where the social sciences and the physical sciences are separate domains of research and knowledge. People generally recognize the significant role of humans in many sociotechnical systems (most notably as a source of error), and they typically advocate that designers account for the ‘human factor’ in the design of technologies. However, they fail to appreciate the self-organizing dynamics that emerge when smart people and smart technologies work together. They fail to recognize that what matters for the successful cognitive functioning of such a system are emergent properties that cannot be discovered in any of the components. Just as a sports team is not simply a collection of people, a sociotechnical system is not simply a collection of things (e.g., humans and automation).

The ultimate challenge of CSE as formulated by Rasmussen, Norman, Hollnagel, Woods and others is to develop a new framework where the ‘cognitive system’ is a fundamental unit of analysis. It is not a collection of people and machines - rather it is an adapting organism with a life of its own (i.e., dynamic properties that arise from relations among the components). CSE reflects a desire to understand these dynamic properties and to use that understanding to design systems that are increasingly safe, efficient, and pleasant to use.