What does an Ecological Interface Design (or an EID) look like?

As one of the people who have contributed to the development of the EID approach to interface design, I often get a variation of this question (Bennett & Flach, 2011). Unfortunately, the question is impossible to answer because it is based on a misconception of what EID is all about. EID does not refer to a particular form of interface or representation; rather, it refers to a process for exploring work domains in the hope of discovering representations to support productive thinking about complex problems.

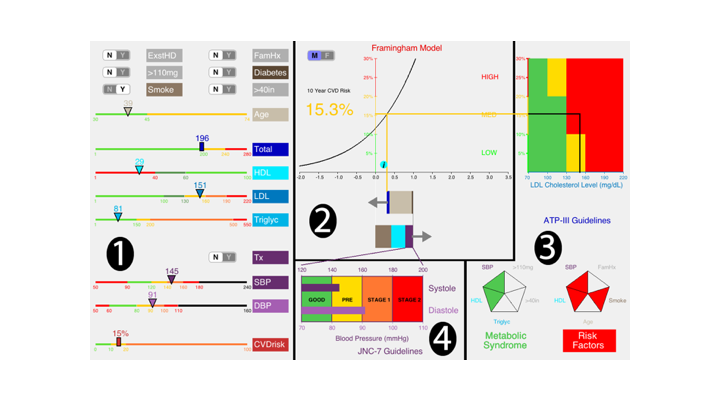

Consider the four interfaces displayed below. Do you see a common form? All four of these interfaces were developed using an EID approach. Yet, the forms of representation appear to be very different.

What makes these interfaces "ecological"?

The most important aspect of the EID approach is a commitment to doing a thorough Cognitive Work Analysis (CWA) with the goal of uncovering the deep structures of the work domain (i.e., the significant ecological constraints) and to designing representations in which these constraints provide a background context for evaluating complex situations.

- In the DURESS interface, Vicente (e.g., 1999) organized the information to reflect fundamental properties of thermodynamic processes related to mass and energy balances.

- The TERP interface, designed by Amelink and colleagues (2005), was inspired by innovations in the design of autopilots based on energy parameters (potential and kinetic energy). The addition of energy parameters helped to disambiguate the relative role of the throttle and stick for regulating the landing path.

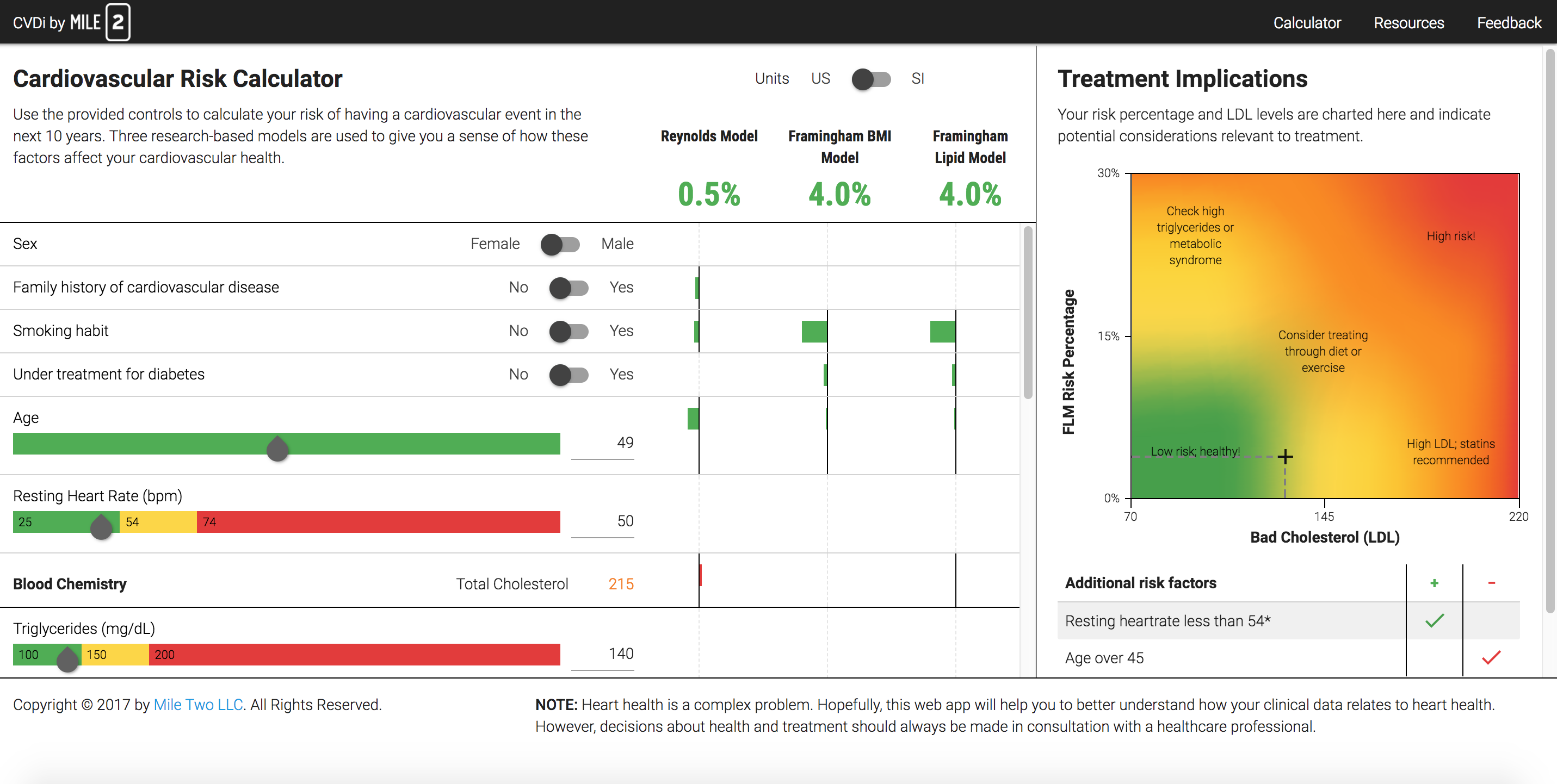

- In the CVD interface (McEwen et al., 2014), published models of cardiovascular risk (e.g., the Framingham and Reynolds Risk Models) became the background for evaluating combinations of clinical values (e.g., cholesterol levels, blood pressure) and for making treatment recommendations.

- In the RAPTOR interface, Bennett and colleagues (2008) included a Force Ratio graphic to provide a high-level view of the overall state of a conflict (e.g., who is winning).
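The energy parameters behind the TERP display can be written out explicitly. As a sketch using standard point-mass mechanics (the symbols $m$ for mass, $g$ for gravitational acceleration, $h$ for altitude, and $V$ for airspeed are notation assumed here, not taken from Amelink and colleagues), the total energy of the aircraft is the sum of its potential and kinetic energy:

$$E_{\text{total}} = \underbrace{m g h}_{\text{potential}} + \underbrace{\tfrac{1}{2} m V^{2}}_{\text{kinetic}}$$

Read this way, one common interpretation in total-energy approaches to flight control is that the throttle mainly changes the total, while the stick mainly trades energy between altitude and airspeed. This is offered as an illustration of the idea, not as a description of how the TERP display itself computes or draws these quantities.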

Although interviews of operators can be a valuable part of any CWA, they are typically not sufficient on their own. With EID, the goal is not to match the operators' mental models, but rather to shape the mental models. For example, the Energy Path in the TERP interface was not inspired by interviews with pilots. In fact, most pilots were very skeptical about whether the TERP would help. The TERP was inspired by insights from aeronautical engineers who discovered that control systems that used energy states as feedback resulted in more stable automatic control solutions.

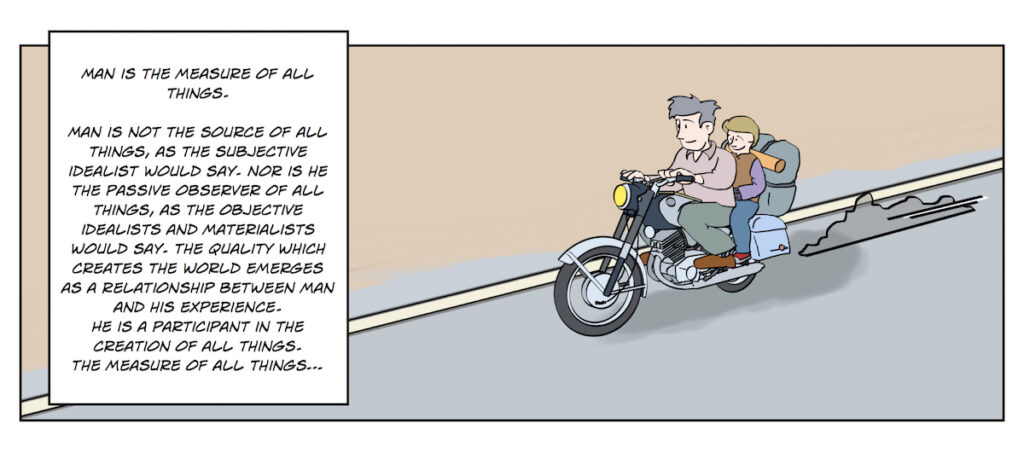

With EID the goal is not to match the operators' mental models, but rather to shape the mental models toward more productive ways of thinking.

A second common aspect of representations designed from an EID perspective is a configural organization. Earlier research on interface design was often framed in terms of an either/or contrast between integral versus separable representations. This suggested that you could EITHER support high-level relational perspectives (integral representations) OR provide low-level details (separable representations), but not both. The EID process is committed to a BOTH/AND perspective, where it is assumed that it is desirable (even necessary) to provide BOTH access to detailed data AND to higher order relations among the variables. In a configural representation the detailed data is organized in a way to make BOTH the detailed data AND more global, relational constraints salient.

For example, the CVD interface displays all of the clinical values that contribute to the cardiovascular risk models. In addition to presenting a risk estimate (an integral function of multiple variables), it shows the relative contribution of each variable. This allows physicians to see not only the total level of risk, but also how much each value contributes to it.
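The both/and idea in the CVD example can be sketched as a small data structure that keeps the raw values, each variable's contribution, and the combined total side by side. This is only an illustration under loud assumptions: the names `ConfiguralView` and `configural_view` are hypothetical, the simple weighted sum stands in for a real risk model, and the weights and values are made-up numbers that have nothing to do with the Framingham or Reynolds models or with the actual CVD interface.

```python
from dataclasses import dataclass


@dataclass
class ConfiguralView:
    """Hypothetical container pairing detailed data with a higher-order value."""
    values: dict         # the raw, detailed data (the particular)
    contributions: dict  # how much each variable adds to the total
    total: float         # the integral, higher-order value (the general)


def configural_view(values, weights):
    """Combine variables with a weighted sum and keep every intermediate piece.

    The weighted sum is an assumption chosen for simplicity; published risk
    models are more involved.
    """
    contributions = {name: weights[name] * value for name, value in values.items()}
    return ConfiguralView(
        values=dict(values),
        contributions=contributions,
        total=sum(contributions.values()),
    )


# Made-up numbers, for illustration only (not clinical guidance).
view = configural_view(
    values={"systolic_bp": 140.0, "total_cholesterol": 220.0},
    weights={"systolic_bp": 0.5, "total_cholesterol": 0.25},
)

# A display built on this structure can show the total and its parts together.
for name, part in view.contributions.items():
    print(f"{name}: value={view.values[name]}, contribution={part}")
print(f"total: {view.total}")
```

The design point is that nothing is discarded on the way to the summary: a display built on this structure can draw the total as the overall pattern while each contribution remains visible as a detail nested within it.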

In configural representations a goal is to leverage the powerful ability of humans to recognize patterns that reflect high-order relations while simultaneously allowing access to specific data as salient details nested within the larger patterns.

The EID process is committed to a both/and perspective, where it is assumed that it is desirable (even necessary) to provide both access to detailed data (the particular) and to higher order relations among the variables (the general).

A third feature of the EID process is the emphasis on supporting adaptive problem solving. The EID approach is based on the belief that there is no single best way or universal procedure that will lead to a satisfying solution in all cases. Thus, rather than designing for procedural compliance, EID representations are designed to help people to explore a full range of options so that it is possible for them to creatively adapt to situations that (in some cases) could not have been anticipated in advance. Accordingly, representations designed from an EID perspective typically function as analog simulations that support direct manipulation. By visualizing global constraints (e.g., thermodynamic models or medical risk models), the representations help people to anticipate the consequences of actions. These representations typically allow people to test and evaluate hypotheses by manipulating features of the representation before committing to a particular course of action or, at least, before going too far down an unproductive or dangerous path of action.

It should not be too surprising that the forms of representations designed from the EID perspective may be very different. This is because the domains that they are representing can be very different. The EID approach does not reflect a commitment to any particular form of representation. Rather, it is a commitment to providing representations that reflect the deep structure of work domains, including both detailed data and more global, relational constraints. The goal is to provide the kind of insights (i.e., situation awareness) that will allow people to creatively respond to the surprising situations that inevitably arise in complex work domains.

Works Cited

Amelink, H.J.M., Mulder, M., van Paassen, M.M. & Flach, J.M. (2005). Theoretical foundations for total energy-based perspective flight-path displays for aircraft guidance. International Journal of Aviation Psychology, 15, 205-231.

Bennett, K.B. & Flach, J.M. (2011). Display and Interface Design: Subtle Science, Exact Art. London: Taylor & Francis.

Bennett, K.B., Posey, S.M. & Shattuck, L.G. (2008). Ecological interface design for military command and control. Journal of Cognitive Engineering and Decision Making, 2(4), 349-385.

McEwen, T., Flach, J.M. & Elder, N. (2014). Interfaces to medical information systems: Supporting evidence-based practice. IEEE: Systems, Man, & Cybernetics Annual Meeting, 341-346. San Diego, CA. (Oct 5-8).

Vicente, K.J. (1999). Cognitive Work Analysis. Mahwah, NJ: Erlbaum.

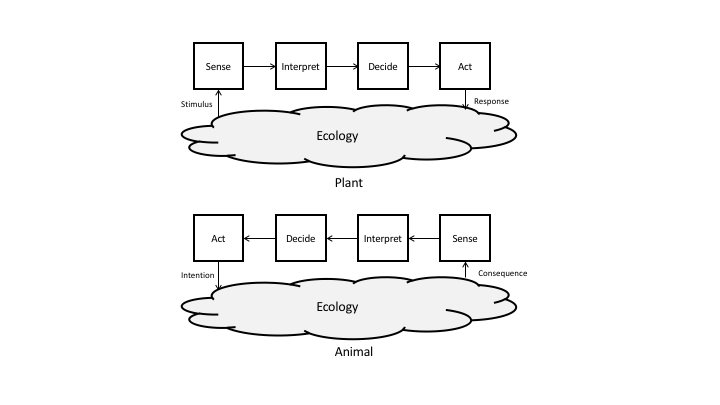

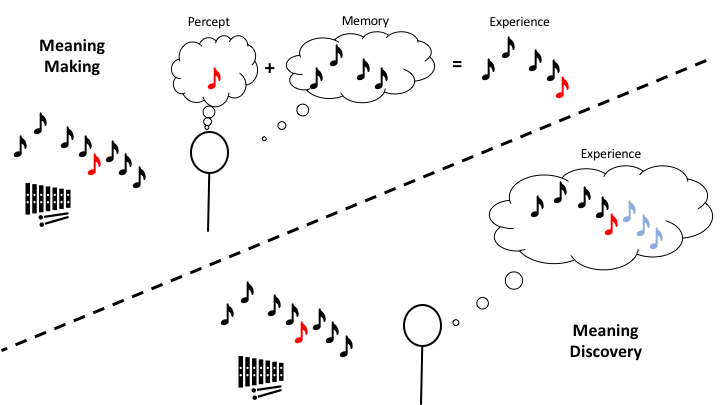

Ever since the Cybernetic Hypothesis was introduced to Psychology, there has been greater appreciation of the "intentional" nature of cognitive systems. Yet, despite this awareness, causal (or stimulus-response) forms of explanation continue to dominate the way many people think about how humans (and other animals) process information. For example, most cognition texts begin with sensation and then follow 'stimulation' through successively deeper levels of processing (perception, decision-making ...).

I was able to take the MVP that we generated in connection with Tim's dissertation to
