Early American Functionalist Psychologists, such as William James and John Dewey, viewed cognition through a Pragmatic lens. For them, cognition involved making sense of the world in terms of its functional significance: What can be done? What will the consequences be? More recently, James Gibson (1979) introduced the word “Affordance” to reflect this functionalist perspective. The term describes an object relative to the possible actions that can be performed on or with it and the possible consequences of those actions. Don Norman (1988) introduced the concept of affordance to designers, who have found it useful for thinking about how people experience a design.

Formalizing Functional Structure

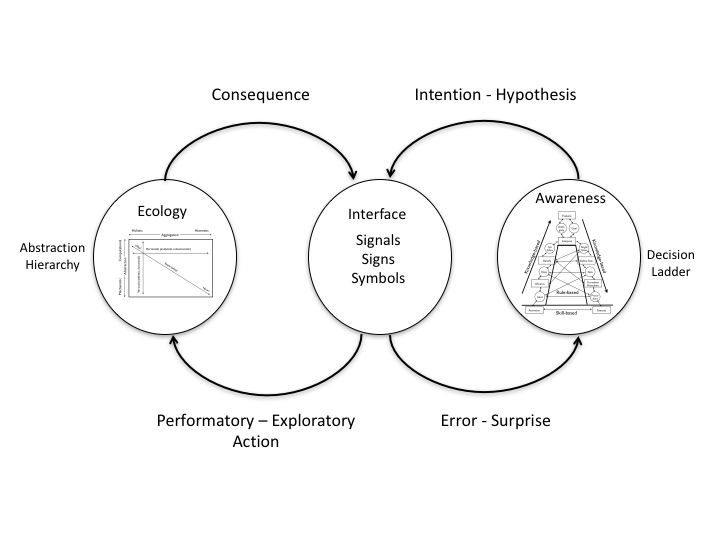

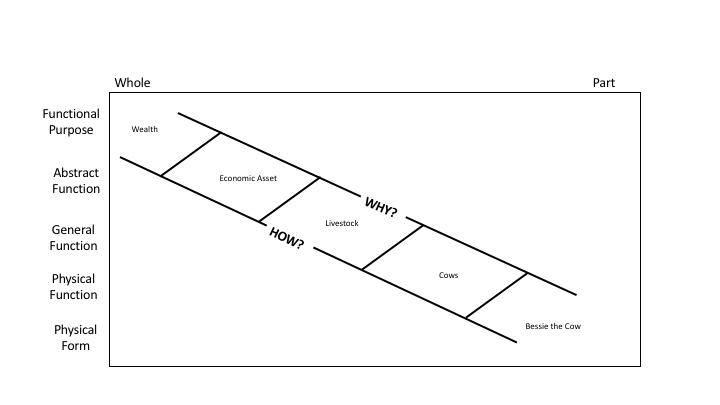

This Functionalist view of the world has been formalized by the philosopher, William Wimsatt (1972), in terms of seven dimensions or attributes for characterizing any object; and by the cognitive systems engineer, Jens Rasmussen (1986), in terms of an Abstraction-Decomposition Space. Figure 1 illustrates some of the parallels between these two methods for characterizing functional properties of an object. The vertical dimension of the Abstraction-Decomposition Space reflects five levels of abstraction that are coupled in terms of a nesting of means-ends constraints. The top level, Functional Purpose, specifies the value constraints on the functional system – what is the ultimate value that is achievable or what is the intended goal or purpose? As you move to lower levels in this hierarchy the focus is successively narrowed down to the specific, physical properties of objects at the lowest Physical Form level of abstraction.

Figure 1. An illustration of how Wimsatt’s functional attributes map into Rasmussen’s Abstraction-Decomposition Space.

An important inspiration for creating the Abstraction-Decomposition Space was Rasmussen’s observations of the reasoning processes of people doing trouble-shooting or fault diagnosis. He observed that the reasoning tended to move along the diagonal in this space. People tended to consider holistic properties of a system at high levels of abstraction (e.g., the primary function of an electronic device) in order to make sense of relations at lower levels of abstraction (e.g., the arrangements of parts). In essence, higher levels of abstraction tended to provide the context for understanding WHY the parts were configured in a certain way. Conversely, people tended to consider lower levels of abstraction to understand how the arrangements of parts served the higher-level purposes. In essence, lower levels of abstraction provided clues to HOW a particular function would be achieved.

Rasmussen found that in the process of trouble-shooting an electronic system, the reasoning tended to move up and down the diagonal of the Abstraction-Decomposition Space. Moving up in abstraction tended to broaden the perspective and to suggest dimensions for selecting properties at lower levels. In essence, the higher level was a kind of filter that determined significance at the lower levels. This filter effectively guided attention and determined how to chunk information and what attributes should be salient at the lower levels. Thus, in the process of diagnosing a fault, experts tended to shift attention across different levels of abstraction until eventually zeroing in on the specific location of a fault (e.g., finding the break in the circuit or the failed part).
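The WHY/HOW reading of the means-ends hierarchy can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the five level names are the ones this essay attributes to Rasmussen, while the `Level` enum and the `why` and `how` helpers are hypothetical names introduced here, not part of Rasmussen's formalism.

```python
from enum import IntEnum
from typing import Optional


class Level(IntEnum):
    """Five levels of abstraction, ordered from most concrete to most abstract."""
    PHYSICAL_FORM = 1
    PHYSICAL_FUNCTION = 2
    GENERAL_FUNCTION = 3
    ABSTRACT_FUNCTION = 4
    FUNCTIONAL_PURPOSE = 5


def why(level: Level) -> Optional[Level]:
    """Move up one level: the end that gives this level its significance."""
    if level is Level.FUNCTIONAL_PURPOSE:
        return None  # nothing above the ultimate purpose
    return Level(level + 1)


def how(level: Level) -> Optional[Level]:
    """Move down one level: the means by which this level is achieved."""
    if level is Level.PHYSICAL_FORM:
        return None  # nothing below the physical form
    return Level(level - 1)
```

Asking `why(Level.PHYSICAL_FORM)` returns `Level.PHYSICAL_FUNCTION`, and asking `how(Level.FUNCTIONAL_PURPOSE)` returns `Level.ABSTRACT_FUNCTION`. Repeated calls in either direction trace the kind of up-and-down movement along the hierarchy described above, although real diagnostic reasoning also shifts the level of decomposition, which this sketch leaves out.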

Wimsatt’s formalism for characterizing an object or item in functional terms is summarized in the following statement:

According to theory T, a function of item i, in producing behavior B, in system S in environment E relative to purpose P is to bring about consequence C.

Figure 1 suggests how Wimsatt’s seven functional attributes of an object might fit within Rasmussen’s Abstraction-Decomposition Space. The object or item (i) as a physical entity corresponds with the lowest level of abstraction and the most specific level of decomposition. The purpose (P) corresponds with the highest level of abstraction at a more global level of decomposition. The Theory (T) and System (S) attributes introduce additional constraints for making sense of the relation between the object and the purpose. Theory (T) provides the kind of holonomic constraints (e.g., physical laws) that Rasmussen considered at the Abstract Function level. These constraints set limits on how a purpose might be achieved (e.g., the laws of aerodynamics set constraints on how airplanes or wings can serve the purpose of safe travel). The System (S) attributes provide the kind of organizational constraints that Rasmussen considered at the General Function level. These constraints describe the object’s role in relation to other parts of a system in order to serve the higher-level Purpose (P) (e.g., a general function of the wing is to generate lift). The Behavior (B) attribute fits with Rasmussen’s Physical Function level, which describes the physical constraints relative to the object’s role as a part of the organization (e.g., here the distinction between fixed and rotary wings comes into play). The Environment (E) attribute crosses levels of abstraction as a way of providing the ‘context of use’ for the object. Finally, the Consequence (C) attribute provides the specific effect that the object produces relative to achieving the purpose (e.g., the specific lift coefficient for a wing of a certain size and shape).
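One way to make this mapping concrete is to treat Wimsatt’s statement as a record with seven fields and to tag each field with the level of abstraction the text assigns to it. The Python below is a minimal sketch, not a definitive encoding: `FunctionStatement`, `render`, and `LEVEL_OF_ATTRIBUTE` are hypothetical names, the level for Consequence is left open because the text does not name one, and several values in the wing example (marked in comments) are placeholders invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class FunctionStatement:
    """The seven attributes of Wimsatt's function statement."""
    theory: str        # T
    item: str          # i
    behavior: str      # B
    system: str        # S
    environment: str   # E
    purpose: str       # P
    consequence: str   # C

    def render(self) -> str:
        """Fill the attributes into the sentence template given in the text."""
        return (
            f"According to {self.theory}, a function of {self.item}, "
            f"in producing {self.behavior}, in {self.system} "
            f"in {self.environment} relative to {self.purpose} "
            f"is to bring about {self.consequence}."
        )


# Level assigned to each attribute in the discussion of Figure 1.
LEVEL_OF_ATTRIBUTE: Dict[str, Optional[str]] = {
    "purpose": "Functional Purpose",
    "theory": "Abstract Function",
    "system": "General Function",
    "behavior": "Physical Function",
    "item": "Physical Form",
    "environment": None,  # crosses levels as the 'context of use'
    "consequence": None,  # no level named in the text; left open here
}

# Wing example assembled from the text; placeholders are marked.
wing = FunctionStatement(
    theory="the laws of aerodynamics",
    item="a wing",
    behavior="fixed-wing flight",             # placeholder wording
    system="an airplane",
    environment="ordinary flight conditions",  # placeholder, not in the text
    purpose="safe travel",
    consequence="a specific lift coefficient",
)

print(wing.render())
```

Keeping the level tags in a separate table rather than inside the record reflects the point that the mapping itself is open to debate: the statement can stay fixed while the assignment of attributes to levels is revised.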

While the details of the mapping in Figure 1 might be debated, there seems to be little doubt that the formalisms suggested by Wimsatt and Rasmussen are rooted in very similar intuitions. Both treat making sense of the world from a functionalist perspective, in which ‘meaning’ is grounded in a network of means-ends relations that associates objects with the higher-level purposes and values that they might serve. This connection between ‘meaning’ and higher levels of abstraction has also been recognized by S.I. Hayakawa with his formalism of the Abstraction Ladder.

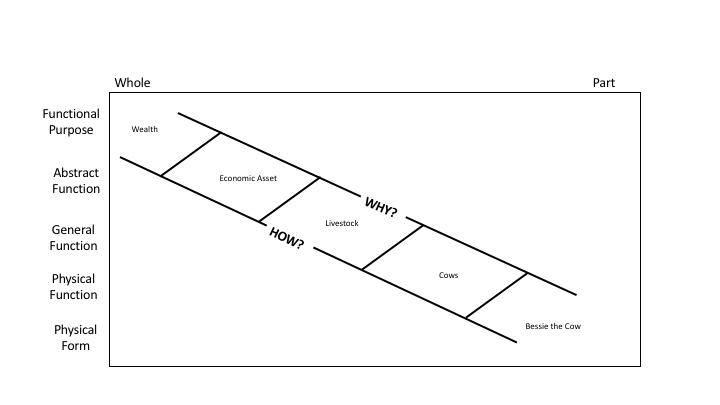

Hayakawa used the case of Bessie the Cow to illustrate how higher levels of abstraction provide a broader context for understanding the meaning of a specific object in relation to progressively broader systems of associations (see Figure 2).

Figure 2. An illustration of how Hayakawa’s Abstraction Ladder maps into Rasmussen’s Abstraction-Decomposition Space.

Figure 2 illustrates how the distinctions that Hayakawa introduced with his Abstraction Ladder might map to Rasmussen’s Abstraction-Decomposition Space. It has been noted by Hayakawa and others that building engaging narratives involves moving up and down the Abstraction Ladder (or equivalently moving along the diagonal in the Abstraction-Decomposition Space). This is consistent with Rasmussen’s observations about trouble-shooting. Thus, the common intuition is that the process of sensemaking is intimately associated with unpacking the different layers of relations between an object and the larger functional networks or contexts in which it is nested.

The Nature of Expertise

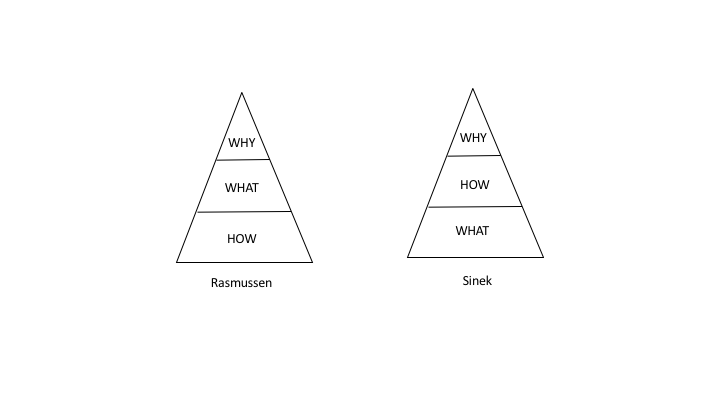

The parallels between Rasmussen’s observations of expert behavior in trouble-shooting and fault diagnosis and the implications of Hayakawa’s Abstraction Ladder for constructing interesting narratives might help to explain why case-based learning (Bransford, Brown & Cocking, 2000) is particularly effective for communicating expertise and why narrative approaches for knowledge elicitation (e.g., Klein, 2003; Kurtz & Snowden, 2003) are so effective for uncovering expertise. Even more significantly, perhaps the ‘intuitions’ or ‘gut feel’ of experts may reflect a higher degree of attunement with constraints at higher levels of abstraction. That is, while journeymen may know what to do and how to do it, they may not have the deeper understanding of why one way is better than another (e.g., Sinek, 2009) that differentiates the true experts in a field. In other words, the ‘gut feel’ might reflect the ability of experts to appreciate the coupling of objects and actions with ultimate values and higher-level purposes. Further, this link to value and purpose may have an important emotional component (e.g., Damasio, 1999). This suggests that expertise is not simply a function of knowing more; it may also require caring more.

Conclusions

As Weick (1995) noted, an important aspect of sensemaking is what Schön (1983) called problem setting. Weick wrote:

When we set the problem, we select what we will treat as “things” of the situation, we set the boundaries of our attention to it, and we impose upon it a coherence which allows us to say what is wrong and in what directions the situation needs to be changed. Problem setting is a process in which, interactively, we name the things to which we will attend and frame the context in which we will attend to them (Weick, 1995, p. 9).

The fundamental point is that the construct of function as reflected in the formalisms of Wimsatt, Rasmussen, and Hayakawa may provide important clues into the nature of how people set the problem as part of a sensemaking process. In particular, the diagonal in Rasmussen’s Abstraction-Decomposition space may provide clues for how people parse the details of complex situations using filters at different layers of abstraction to ultimately make sense relative to higher functional values and purposes.

Thus, here are some important implications:

- A functionalist perspective provides important insights into the sensemaking process.

- This functionalist perspective is the common intuition underlying the formalisms introduced by Gibson, Wimsatt, Rasmussen, and Hayakawa.

- Sensemaking involves navigating across levels of abstraction and levels of detail to identify functional or means-ends relations within a larger network of associations between objects (parts) and contexts (wholes).

- Links between higher levels of abstraction (values, purposes) and lower levels of abstraction (general functions, components and behaviors) may reflect the significance of couplings between emotions, knowledge, and skill.

- The various formalisms described here provide important frameworks for understanding any sensemaking process (e.g., fault diagnosis, storytelling, or intel analysis) and have important implications for both eliciting knowledge from experts and for representing information to facilitate the development of expertise through training and interface design.

Key Sources

- Bransford, J.D., Brown, A.L. & Cocking, R. (2000). How People Learn. Washington, DC: National Academy Press.

- Damasio, A. (1999). The Feeling of What Happens: Body and emotion in the making of consciousness. Orlando, FL: Harcourt.

- Flach, J.M. & Voorhorst, F.A. (2016). What Matters: Putting common sense to work. Dayton, OH: Wright State Library.

- Gibson, J.J. (1979). The Ecological Approach to Visual Perception. New York: Houghton Mifflin.

- Hayakawa, S.I. (1990). Language in Thought and Action. 5th ed. New York: Houghton Mifflin Harcourt.

- Klein, G. (2003). Intuition at Work. New York: Doubleday.

- Kurtz, C.F. & Snowden, D.J. (2003). The new dynamics of strategy: Sense-making in a complex and complicated world. IBM Systems Journal, 42, 462-483.

- Norman, D.A. (1988). The Psychology of Everyday Things. New York: Basic Books.

- Rasmussen, J. (1986). Information Processing and Human-Machine Interaction. New York: North-Holland.

- Schön, D.A. (1983). The Reflective Practitioner. New York: Basic Books.

- Sinek, S. (2009). Start with Why: How great leaders inspire everyone to take action. New York: Penguin.

- Weick, K.E. (1995). Sensemaking in Organizations. Thousand Oaks, CA: Sage.

- Wimsatt, W.C. (1972). Teleology and the logical structure of function statements. Studies in History and Philosophy of Science, 3(1), 1-80.