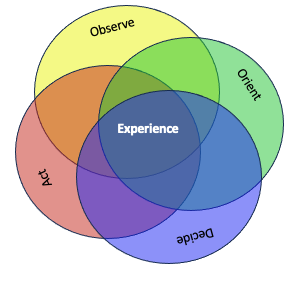

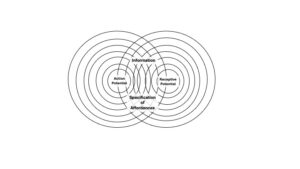

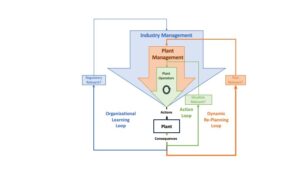

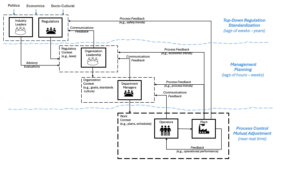

Most organisms and essentially all organizations have multiple layers of interconnected perception-action loops. In general, these loops operate at different time constants, have differential access to information, and have differential levels of authority. One way to think about the coupling across authority levels is that higher levels set the bounds (or the degrees of freedom) for activity at lower levels. In this context, a key attribute of an organization is the tightness of the couplings between different levels.

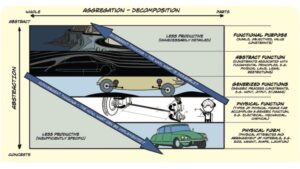

For example, the classic Scientific Management approach involves a very tight coupling across levels. With this approach, higher levels (e.g., management) are responsible for determining the 'right way' to work, and for implementing training and reward systems to ensure that the lower levels (operators, workers) strictly adhere to the prescribed methods. This is an extremely tight coupling that leaves workers very few degrees of freedom, as they are typically punished for deviating from the "one best way" prescribed by management. In essence, the organization functions as a clockwork mechanism. This approach can be successful in static, predictable environments, where the assumptions and models of managers are valid. However, in a changing, complex world (e.g., VUCA environments: volatile, uncertain, complex, and ambiguous) this approach will be extremely brittle, because the cycle times for the upper levels of the system will always be too slow to keep pace with the changing environment. This approach will surely fail in any highly competitive environment with intelligent, adaptive competitors.

One way to loosen the coupling between levels is to replace the "one best way" with a playbook, or a collection of smart mechanisms that are designed to address different situations that the organization might face. Typically, higher levels develop the playbook and are responsible for training workers on the plays and for rewarding or punishing them according to how well they select the right play for the situation and implement that play.

The coupling can be relatively tight if the playbook is treated as a prescription such that any deviations or variations from the plays are punished, especially when negative results ensue. Or the coupling can be looser if the plays are treated as suggestions, deviations (e.g., situated adaptations) are expected or even encouraged, and the lower levels are not punished for unsuccessful adaptations.

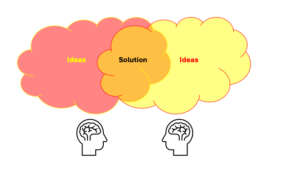

A still looser coupling is the approach of Mission Command. With Mission Command, higher levels in the organization set objectives and values, but they leave it to lower levels of the organization to determine how best to achieve those objectives given changing situations. This approach requires high levels of trust across levels in an organization. Higher levels have to trust in the competence and motivations of the lower levels, and lower levels have to trust that higher levels will have their backs, so that they will not be blamed or punished when well-motivated adaptations are not successful.

Also, Elinor Ostrom's construct of polycentric governance describes how loosely coupled social systems self-organize to manage shared resources in a way that avoids the tragedy of the commons. Note that Ostrom's work contradicts the common notion that top-down control is essential to prevent competition between local interests from resulting in complete exhaustion of a resource. Her research discovered many situations where coordination and cooperation emerged bottom-up without being imposed by a centralized, external authority.

These are simply some examples of different levels of tightness in the couplings between levels, intended as landmarks within a continuum of possibilities. On one end of the continuum are tightly coupled organizations where the couplings across levels are like the meshing of gears in a clock. As couplings become looser, the organization becomes less machine-like and more organismic. Metaphors that are often used for looser couplings include coaching and gardening. In organizations with looser couplings, higher levels introduce constraints (e.g., guidance, suggestions, resources), but don't determine activities. The higher levels tend the garden, leaving the plants (workers) free to grow on their own.

In an increasingly complex world, organizations with tight couplings across levels will tend to be brittle and vulnerable to catastrophic failures due to an inability to keep pace with changing situations. When couplings across levels are looser, the potential for bottom-up innovation and self-organization increases. However, if the coupling is too loose, there is an increasing danger of inefficiencies, conflict, and chaos. Thus, organizations must continuously balance the tightness of the couplings, trading off top-down authority and control to increase the capacity for exploration and innovation (adaptive self-organization) at the operational level. In a complex world, loosening the couplings can allow the organization to muddle more skillfully.