Three paramedics are driving along a rural road in central Ohio when a truck in front of them suddenly seems to go out of control, causing several other cars to crash, then hitting some pedestrians who were skating along the road, and eventually hitting a tree. The paramedics get out to survey the scene. Two of them each go to a different victim and begin treatment; the third surveys the situation and heads back to the car, where he remains throughout the event.

What is he doing in the car?

In fact, after the event was over, the other two paramedics were puzzled and asked him why he had not immediately begun treating the other victim. His response was "Which other victim?" It turns out that neither of the other two paramedics had realized that there were more than three victims.

What was the third paramedic doing in the car? He was taking incident command. He realized that for some of the victims to survive, it would be essential to get them to trauma hospitals, where they could get more extensive treatment, within the "golden hour." He realized that to ensure that all the victims were saved, they would need to get ambulances and a care flight helicopter to the scene as quickly as possible. He was on the radio calling for support and giving the responders directions so that they could reach the rural scene as quickly as possible. This included identifying a potential landing site for the care flight helicopter.

While he was in the car, a number of other people stopped to offer help, including a nurse. He was able to direct these volunteers, asking the nurse to attend to one of the injured and directing another person to attend to the truck driver, who was wandering from his truck in a daze.

After the incident, this paramedic explained to the other two, "Yes, I could have started to treat one of the victims, but I wanted to make sure that all the victims survived. And I realized that to do that, we needed more resources and somebody would have to take command and coordinate these resources."

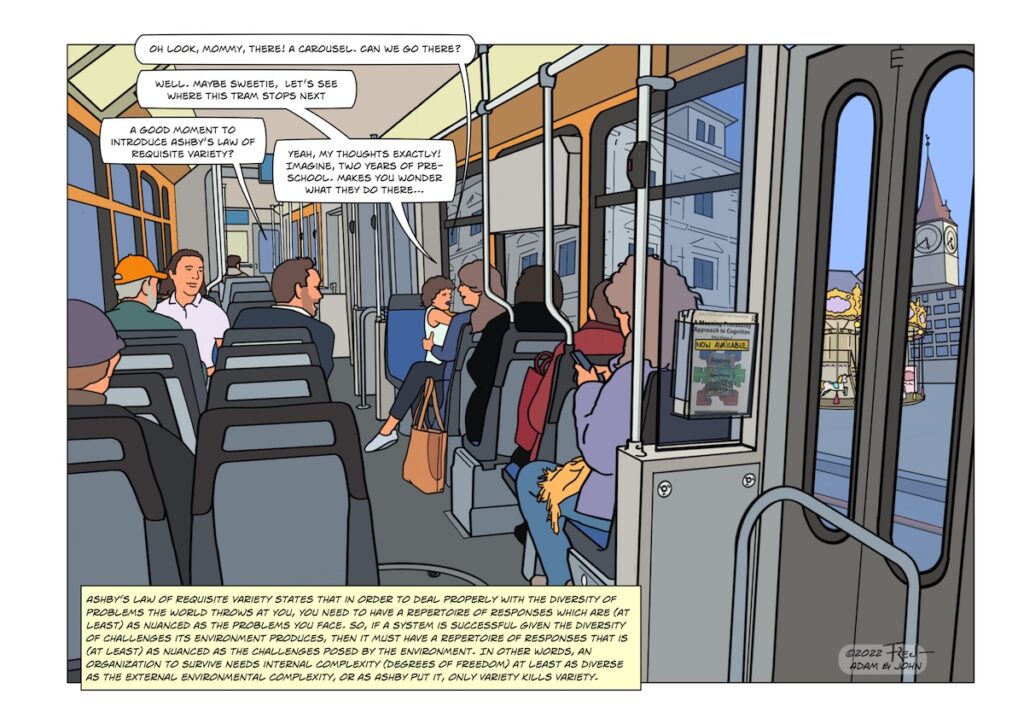

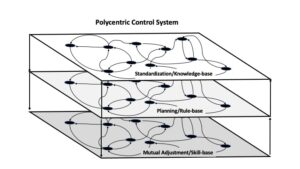

This story illustrates the functions related to three different layers in a polycentric control system.

- At the mutual adjustment level, two of the paramedics, the nurse, and other volunteers were directly acting to address the immediate demands of the situation.

- At the planning level, the third paramedic was functioning as an incident commander. He wasn't directly treating patients, but he was attending to larger patterns and trends in order to anticipate needs and to coordinate the resources that would ultimately be critical for achieving a satisfying outcome.

- At the standardization level, the third paramedic took this role because he had been trained in the National Incident Management System (NIMS), which was developed from generations of firefighting experience with large forest fires. NIMS has become a national standard, and many fire and police departments are required to have NIMS training. Establishing and training to a standard reflects contributions at the Standardization or Knowledge-based layer of the emergency organizations. Additionally, the idea of a "golden hour" is a principle based on extensive experience in emergency medicine. The Standardization Layer reflects a capacity to integrate across an extensive history of past events to pull out principles that provide guideposts (structure, constraints) for organizing operations at the other levels.

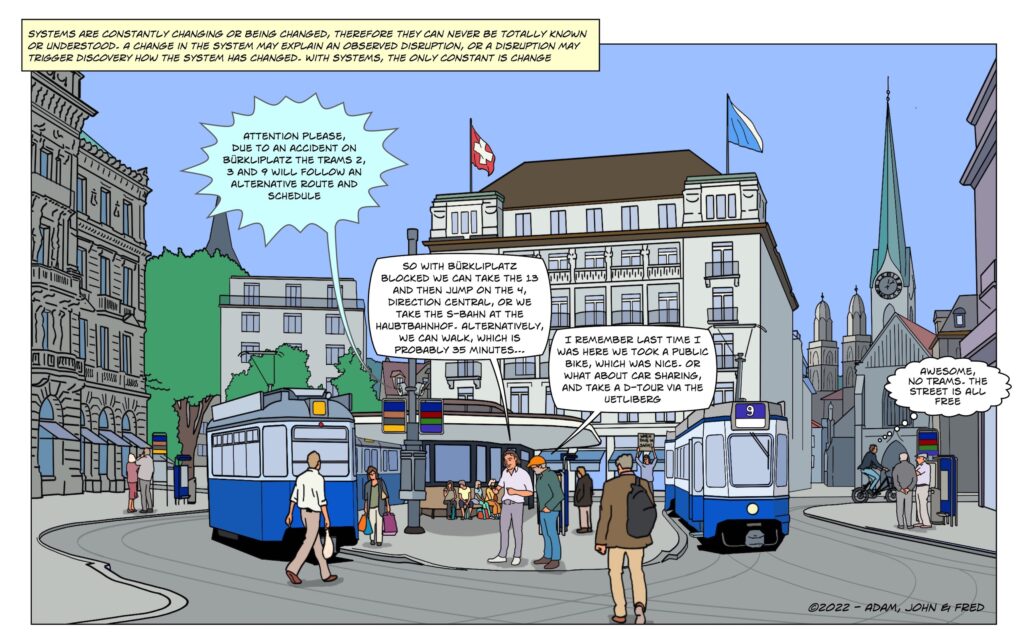

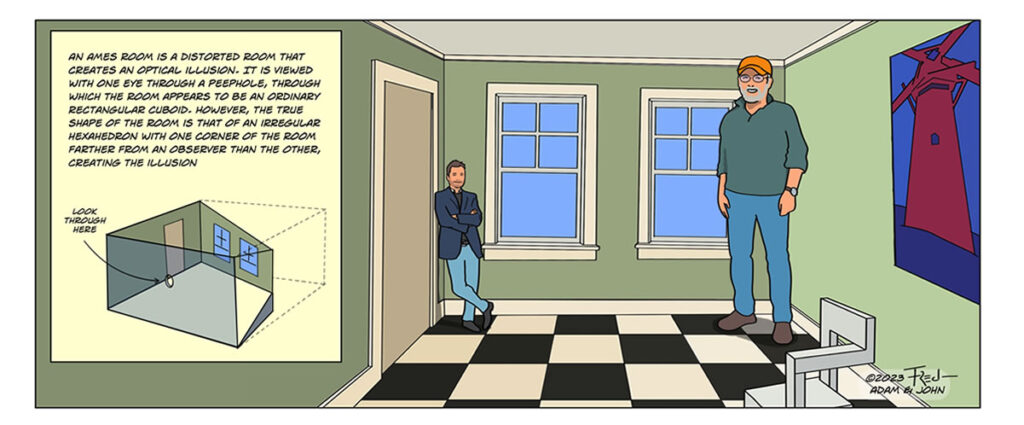

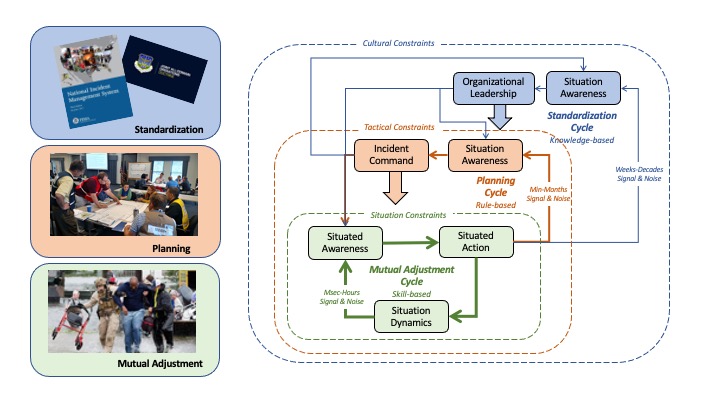

The figure below is an alternative to the layered diagram used in prior essays for representing a polycentric control system. This representation was developed based on observations of and interviews with emergency response personnel. Here the layers are illustrated as nested control loops. These loops are linked through communications (the thin lines) but also through the propagation of constraints (the block arrows), in which outer loops shape or structure the possibilities within the inner loops (e.g., standard practices, command intent, a plan, distributing resources). The coordination between layers is critical to achieving satisfactory results, and this coordination depends both on communications within and between layers and on the propagation of constraints (sometimes articulated as common ground, shared expectations, shared mental models, or culture).

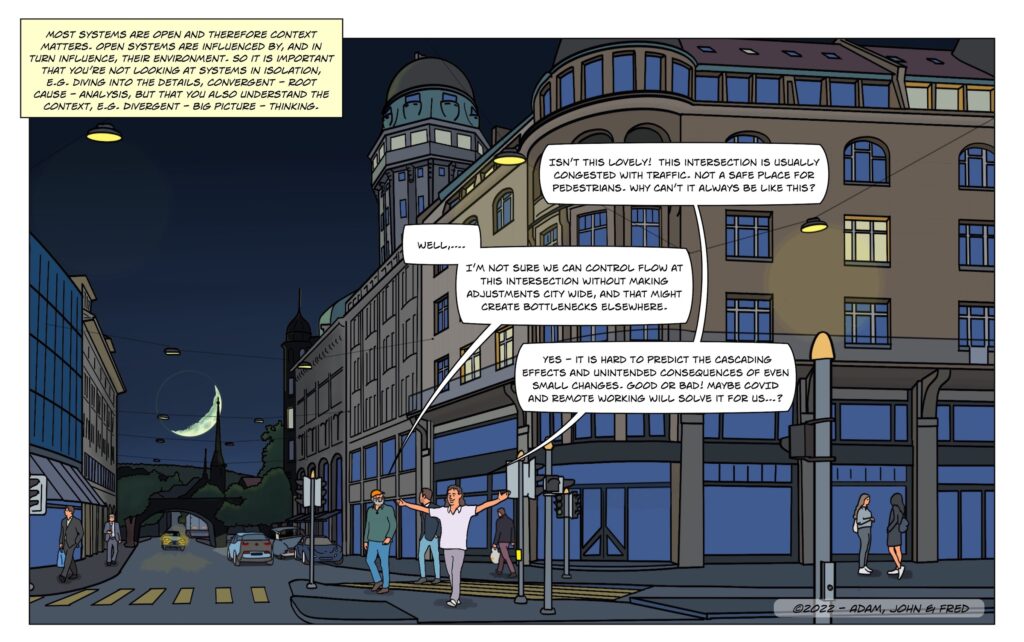

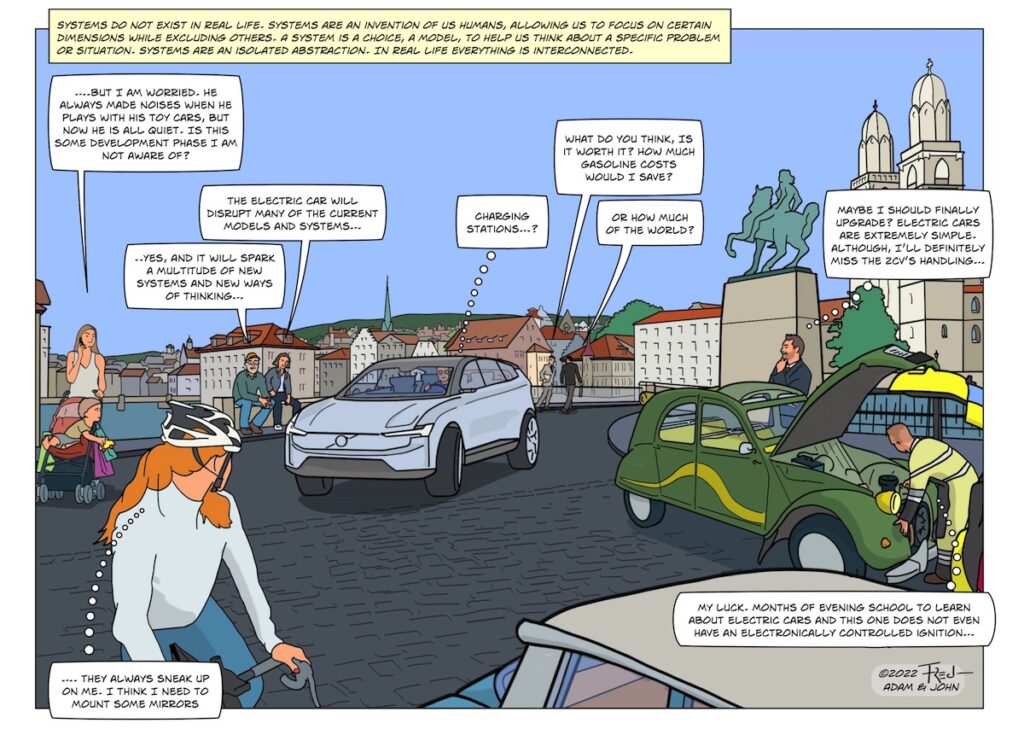

Note that neither the previous representation showing layers nor the present representation showing nested control loops is complete. Each representation makes some aspects of the dynamic salient while hiding other aspects, and perhaps carrying entailments that are misleading with respect to the actual nature of the dynamic. An assumption of general systems thinking is that there is no perfect representation or model. Thus, it is essential to take multiple perspectives on nature in order to discover the invariants that matter, to distinguish the signal from the noise.

Three important points:

- First - the power of a polycentric control system for addressing complex situations is that each layer has access to potentially essential information that would be difficult or impossible to access at other layers. However, without effective coordination between the layers, some of that information will be impotent.

- Second - it is easy for people operating within a layer to have tunnel vision, to take the functions of the other layers for granted, and to underestimate the value that the other layers contribute. For example, it is easy for Adam and me to take Fred's artwork for granted. However, if Fred were replaced by someone less skilled or with a different style, the gap would suddenly become clearly evident.

- Third - be careful not to get trapped in any single perspective, model, or metaphor. Be careful that your models don't become an iron box that you force the natural world to fit into. Be careful not to fall prey to the illusion that any single model will provide the requisite variety that you need to regulate nature and reach a satisfying outcome.

Now, the rest of the story: All the victims from the accident above survived.