First, the span of absolute judgment and the span of immediate memory impose severe limitations on the amount of information that we are able to receive, process, and remember. By organizing the stimulus input simultaneously into several dimensions and successively into a sequence of chunks, we manage to break (or at least stretch) this informational bottleneck.

Second, the process of recoding is a very important one in human psychology and deserves much more explicit attention than it has received. In particular, the kind of linguistic recoding that people do seems to me to be the very lifeblood of the thought processes. Recoding procedures are a constant concern to clinicians, social psychologists, linguists, and anthropologists and yet, probably because recoding is less accessible to experimental manipulation than nonsense syllables or T mazes, the traditional experimental psychologist has contributed little or nothing to their analysis. Nevertheless, experimental techniques can be used, methods of recoding can be specified, behavioral indicants can be found. And I anticipate that we will find a very orderly set of relations describing what now seems an uncharted wilderness of individual differences. George Miller (1956), pp. 96-97 (emphasis added).

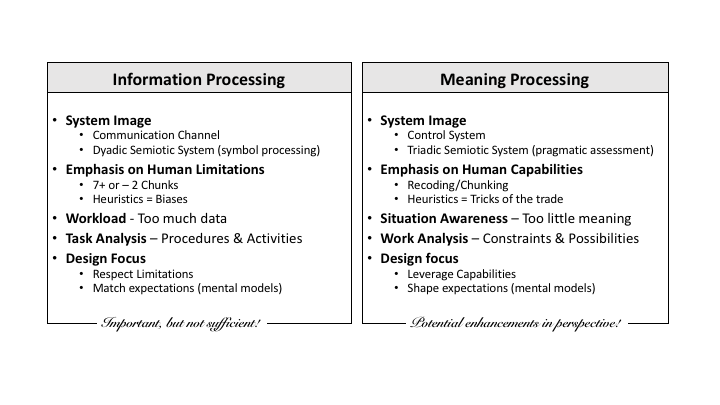

This post continues the discussion of the differences between Cognitive Systems Engineering (CSE; meaning processing) and more classical Human Factors (HF; information processing) approaches to human performance, summarized in the table above. It focuses on the second line in the table: shifting the emphasis from human limitations to include greater consideration of human capabilities.

Anybody who has taken an intro class in Psychology or Human Factors is familiar with Miller's famous number, 7 plus or minus 2 chunks, which specifies the capacity of working memory. This is one of the few numbers that we can confidently give system designers as a specification of the human operator that must be respected. However, the number has little practical value for design unless you can also specify what constitutes a 'chunk.'

Although people know Miller's number, few appreciate the important points Miller makes in the second half of the paper about the power of 'recoding' and its implications for the functional capacity of working memory. As noted in the opening quote, people have the ability to 'stretch' memory capacity through chunking. The intro texts emphasize the "limitation," but much less attention has been paid to the recoding "capability" that allows experts to extend their functional memory capacity to handle large amounts of information (e.g., the capability that allows an expert chess player to recall all the pieces on a chessboard after a very brief glance).
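To make 'recoding' a little more concrete, here is a minimal sketch in the spirit of the binary-to-octal recoding example Miller discusses in the second half of his paper: a string of binary digits is regrouped into three-digit chunks, and each chunk is renamed with a single octal digit. The function name `recode_binary_to_octal` is hypothetical, and the sketch only illustrates how the number of items to be held shrinks; it is not a model of memory.

```python
def recode_binary_to_octal(bits: str) -> list[str]:
    """Recode binary digits into octal chunks (illustrative sketch only)."""
    # Only binary digits are meaningful input.
    if not bits or any(b not in "01" for b in bits):
        raise ValueError("expected a non-empty string of 0s and 1s")
    # Left-pad with zeros so the length is a multiple of 3.
    bits = "0" * ((-len(bits)) % 3) + bits
    # Split into three-bit chunks and rename each chunk with one octal digit (0-7).
    return [str(int(bits[i:i + 3], 2)) for i in range(0, len(bits), 3)]


bits = "101000100111001110"  # 18 binary digits
octal = recode_binary_to_octal(bits)
print(len(bits), "items ->", len(octal), "items:", "".join(octal))
# prints: 18 items -> 6 items: 504716
```

The same information is carried by a third as many chunks, but only for someone who has learned the code. This is why the functional capacity of memory depends on what counts as a chunk for a particular person.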

Cataloguing visual illusions has been a major thrust of research in perception. Similarly, cataloguing biases has been a major thrust of research in decision making. However, people such as James Gibson have argued that these collections of illusions do not add up to a satisfying theory of how perception works to guide action (e.g., in the control of locomotion). In the same vein, people such as Gerd Gigerenzer have argued that collections of biases (the dark side of heuristics) do not add up to a satisfying theory of decision making in everyday life and work. One reason is that everyday situations often involve missing or ambiguous data and incommensurate variables that make it difficult or impossible to apply more normative, algorithmic approaches.

One result of Tversky and Kahneman's focus on decision errors is that the term 'heuristic' is often treated as if it were synonymous with 'bias.' Heuristics are thus taken to illustrate the 'weakness' of human cognition: the bounds of rationality. However, Herbert Simon's early work in artificial intelligence treated heuristics as the signature of human intelligence. A computer program was only considered intelligent if it took advantage of heuristics that creatively used problem constraints to find shortcuts to solutions, as opposed to applying mathematical algorithms that solved problems by mechanical brute force.

This emphasis on illusions and biases tends to support the MYTH that humans are the weak link in any sociotechnical system and it leads many to seek ways to replace the human with supposedly more reliable 'automated' systems. For example, recent initiatives to introduce autonomous cars often cite 'reducing human errors and making the system safer' as a motivation for pursuing automatic control solutions.

Thus, the general concept of rationality tends to be idealized around the things that computers do well (using precise data to execute complex algorithms and solve logical puzzles), and it tends to underplay those aspects of rationality where humans excel (detecting patterns and deviations, and creatively adapting to surprise, novelty, and ambiguity).

CSE recognizes the importance of respecting the bounds of human rationality when designing systems, but it also appreciates the limitations of automation (and the fact that when the automation fails, it will typically fall to the human to fill the gap). Further, CSE starts from the premise that 'human errors' are best understood in relation to human abilities. On one hand, a catalogue of human errors will never add up to a satisfying understanding of human thinking. On the other hand, a deeper understanding may be possible if the 'errors' are seen as 'bounds' on abilities. In other words, CSE assumes that a theory of human rationality must start with a consideration of how thinking 'works,' in order to give coherence to the collection of errors that emerge at the margins.

The implication is that CSE tends to be framed in terms of designing to maximize human engagement and fully leverage human capabilities, as opposed to more classical human factors, which tends to emphasize protecting systems against human errors and limitations. This need not be framed as human versus machine; rather, it should be framed in terms of human-machine collaboration. The ultimate design goal is to leverage the strengths of both humans and technologies to create sociotechnical systems that extend the bounds of rationality beyond the range of either component alone.